Task-aware Distributed Source Coding under Dynamic Bandwidth

posted on November 28, 2023

By Po-han

Leveraging Neural Distributed Principal Component Analysis for Efficient Data Transmission

In today’s interconnected world, the constant exchange of data among various sensors and devices has never been more vital. The efficient transmission of the data presents several challenges:

- How do we define important features from a data distribution?

- How do we extend this to multiple data distributions or modalities settings?

- How do we compress and prioritize the features so that we can transmit the important features first to save network bandwidth?

To tackle these challenges, our collaborative work with researchers from the Honda Research Institute has given birth to a transformative solution known as Neural Distributed Principal Component Analysis, or NDPCA. In this blog, we will briefly cover the intricacies of NDPCA and its pivotal role in simplifying complex data transmission processes.

The Setting

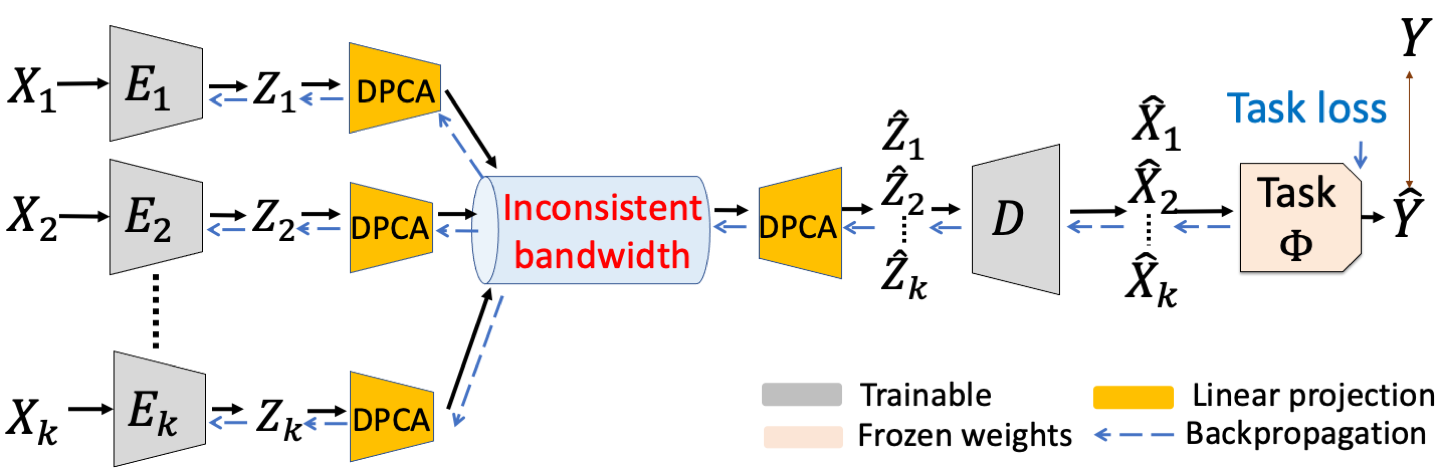

Here is our pipeline of NDPCA: each sensor independently compresses its data before transmitting it to a central node, where a decoder reconstructs all the data. This reconstructed data is then fed into a pre-trained machine-learning task model, which produces the desired output. It’s crucial to note that the quality of the final output hinges on compressing only the relevant features for the task at hand, and the overall performance is closely tied to available bandwidth.

For instance, the sensors are satellites compressing images of the Earth. The task model is a machine-learning model that can detect natural disasters from the images. The bandwidth is the communication channel between the satellites and the ground station.

The Challenge: Variable Bandwidth

In real-world scenarios, bandwidth availability can be unpredictable, with higher bandwidths typically yielding better task performance. To confront this challenge head-on, we design a novel framework capable of flexible data compression from multiple sources. This framework seamlessly adapts to varying bandwidth conditions while minimizing computing and storage overhead with a single model.

The Solution: NDPCA

Our solution, NDPCA, represents a breakthrough in this field. It comprises independent encoders and a joint decoder, facilitating efficient data compression and transmission. NDPCA’s standout feature is its adaptability to any available bandwidth using a single model, thereby simplifying computational and storage requirements significantly.

A Closer Look at NDPCA

Learning Low-Rank Task Representations

NDPCA harnesses the power of principal component analysis to learn low-rank task representations from the data. By focusing on the most essential features, the framework can efficiently compress the data without compromising task performance.

Distributed Compression

Each sensor in the network independently compresses its data using the learned low-rank task representations. This step ensures that only relevant information is transmitted, effectively reducing communication overhead between the sensors, which can be up to O(n^2).

Joint Decoding

At the central node, a joint decoder plays a pivotal role in reconstructing the compressed data from multiple sensors. This reconstructed data is then fed into the task model, ensuring that only the important task-relevant features are represented, ultimately leading to maintaining the task performance.

The Benefits and Significance

Adaptability to Varying Bandwidth

NDPCA can dynamically compress and transmit the most important features to match the available bandwidth, thereby maximizing the performance of the task model. This adaptability proves invaluable in real-world scenarios where bandwidth availability can fluctuate unpredictably.

Reduced Computing and Storage Overhead

By employing a single model and distributed compression, NDPCA significantly reduces the computational and storage requirements in comparison to traditional methods. This efficiency renders NDPCA viable even in resource-constrained environments.

Enhanced Task Performance

By compressing only the features that are pertinent to the task at hand, NDPCA ensures that the task model receives data of the highest quality. This, in turn, leads to improved overall performance and accuracy.

Conclusion

Our paper, titled “Task-aware Distributed Source Coding under Dynamic Bandwidth,” introduces NDPCA as a revolutionary solution to the challenges of data compression and communication in multi-sensor networks. By prioritizing the transmission of task-relevant features from multiple data sources and adapting to varying bandwidth conditions, NDPCA provides an efficient and flexible framework for optimizing data transmission. The significance of this work lies in its potential to enhance communication efficiency and improve task performance across a wide range of applications, from Internet of things devices to large-scale sensor networks.